I’ve recently been doing a tonne of usability testing, and along the way I’ve learned some stuff! I’ve also noticed that usability testing isn’t particularly well understood, so this is a summary of some of the important points and a collection of resources I’ve put together if you want to learn more about it.

Qualitative or Quantitative?

tldr: One is not necessarily better than the other, but they serve different purposes. People often assume that quantitative is ‘better’ because it is statistically significant, but… is it? Quantitative testing helps to identify that there IS a problem, but it doesn’t tell you what the problem is. It’s good for comparison, but it’s also wayyyyyy more expensive and time-consuming. For a statistically significant study you need at least 20 users, and you will probably need multiple rounds of testing (because you’re probably using it to compare a before and after, or one site against another).

On the flip side, qualitative testing focuses on collecting insights and anecdotes about how people use something, and helps to identify WHAT the problem is, rather than just that there is one. Testing with only 5 participants will typically uncover ~85% of usability problems. Iterative testing with smaller groups can be way more practical than multiple large-scale studies!
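If you’re wondering where the ~85% comes from, it’s the model described in the NN/g ‘5 users’ article listed under Resources: the share of problems found by n participants is roughly 1 - (1 - L)^n, where L is the share of problems a single participant uncovers. Here’s a rough sketch of that calculation. The function name is my own, and L = 0.31 is the average reported in that article, so treat it as an assumption rather than a guarantee for your site.

```python
def share_of_problems_found(participants: int, per_user_rate: float = 0.31) -> float:
    """Expected share of usability problems seen at least once.

    per_user_rate is the assumed share of problems a single participant
    uncovers (0.31 is the average quoted by NN/g, not a universal constant).
    """
    return 1 - (1 - per_user_rate) ** participants


if __name__ == "__main__":
    # Compare a few group sizes to see how quickly the returns diminish.
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} participants: {share_of_problems_found(n):.0%}")
```

With the default rate, five participants come out at about 84%, which is where the ‘around 85%’ rule of thumb comes from. The curve flattens quickly after that, which is the argument for several small rounds rather than one big one.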

What is a qualitative usability test?

From my perspective, these are the important bits of a qualitative study:

- Recruit suitable participants

- Work out what you want to test (research questions)

- Work out how to test those things (research tasks)

- Facilitate and record

- Analyse results and iterate

Recruiting participants

We are super lucky that most of our clients are non-profits. Working in the charity space, we are able to recruit volunteer participants, but it’s still one of the most time-consuming parts of the study. We have a standard screening questionnaire that we tweak as needed, but you want to think about things like age, gender, employment status, tech proficiency, language and any red flags. Figure out your ideal participant based on who is most likely to use your site (or struggle using it).

For some of the questions, you might need to get creative! For example, rather than asking ‘how comfortable are you using different types of technology’ or ‘how tech savvy are you’, we might ask:

- How do you regularly access the internet? Tell us more about the device(s) you might use to access a website. (The language they use can be very informative)

- Which of the following tasks would you be confident helping a friend or family member with? (E.g. installing a piece of software, or registering a domain name)

For-profit testing can be a bit trickier, because you are typically expected to reimburse your testers. Having said that, you can usually leverage existing networks to find friends, family or colleagues who are unfamiliar with your project that you can cajole into helping. If you have a product or service you can offer, consider offering an incentive such as going into a draw for a really good prize, or offering a voucher to use with your business. The industry standard is to offer payment, but if you are going to do this, make sure that you aren’t a cheapskate: you could do more harm than good! That conversation is a bit too long for this blog post, but I highly recommend giving Predictably Irrational by Dan Ariely a read to find out why.

Research Questions

Research questions are different from tasks that we give to the user. Before we work out what tasks we intend to give our participants, we need to work out our research goals.

Some prompts I use to help identify research questions include:

- What are the most important things we want people to do, see, or find, on the site?

- What are we most concerned about?

- What did we ‘um’ and ‘ah’ about during discovery/development?

- What assumptions did we make that weren’t evidence based?

I typically aim for between 5 and 10 research questions per study. These should describe the thing you want to test, and will be refined into research tasks in the next step.

Research Activities (tasks)

Research Tasks are what we ask the participant to do. The way that we word our tasks is really important, because it will change the way that our participants approach those tasks.

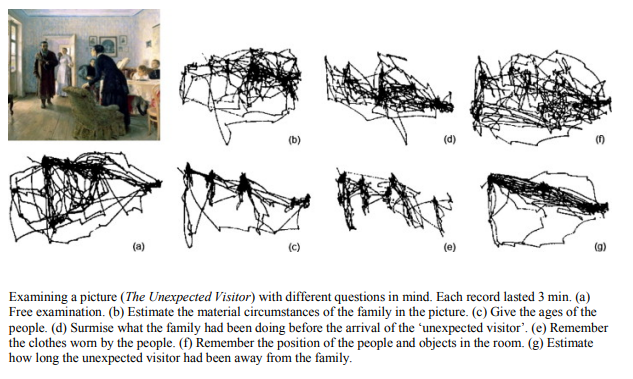

When I was completing my Usability Testing course with Nielsen Norman Group, I really liked this example that they gave about the importance of how a research task is worded.

Basically we want to achieve our goal (the research question) without leading or guiding our participant, but still making sure that the task is realistic and fair.

Facilitate (my favourite bit!)

I record my sessions using Fireflies.ai so that I can watch them back later and easily search for specific words or phrases. Each activity is sent to the participant one at a time, and (this is very important) we ask them to speak aloud and narrate their thought process. We avoid having spectators if possible (there’s no need, it’s recorded and the activities are organised ahead of time) and avoid talking to the participant during the activity.

I’ve also found it useful to ask the participants to do a ‘demo task’ first to get them used to this. I recorded an example of this with my friend Dee (thanks Dee!) for my WordCamp Asia presentation on usability testing.

After each activity, we ask the participant the same three questions:

- How easy or difficult was this activity to complete?

- How confident are you with your answer to question 1?

- How satisfied were you with doing the activity, and the result you got?

Question 2 is a weird one but is useful to identify when they didn’t actually understand the goal of the activity!

Analyse, Iterate.

Once I’ve done all of the planned sessions, I will create a ‘board’ for the project. This will have a column for each interface that was tested. For example, I might have a column for the header, one for the home page, one for the Services page, one for the footer… you get the idea. In each column I will add sticky notes with either quotes (e.g. “What am I doing wrong?” [when no results were found]) or descriptions of what the user did (e.g. Assumed that keyword was required when using the search form).

I colour code each participant’s sticky notes, so I can tell if the feedback in a column came mostly from one person or was widespread across all participants.
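If your board lives in a spreadsheet or an export rather than on a wall, the same ‘one person or everyone?’ check can be done with a quick tally. This is only a sketch: the column names, participant labels (P1, P2, P3) and the tuple layout are made up for illustration, and the note text reuses the examples above.

```python
from collections import defaultdict

# Hypothetical export of the board: (interface column, participant, note text).
notes = [
    ("Search form", "P1", "Assumed that keyword was required when using the search form"),
    ("Search form", "P2", '"What am I doing wrong?" [when no results were found]'),
    ("Search form", "P1", "Assumed that keyword was required when using the search form"),
    ("Header", "P3", "Placeholder note about the header"),
]


def participants_per_column(notes):
    """Count how many distinct participants left notes in each column."""
    seen = defaultdict(set)
    for column, participant, _text in notes:
        seen[column].add(participant)
    return {column: len(people) for column, people in seen.items()}


if __name__ == "__main__":
    for column, count in participants_per_column(notes).items():
        print(f"{column}: notes from {count} participant(s)")
```

Counting distinct participants rather than raw notes is the point here: one chatty participant can fill a column on their own, and that is a different finding from three people tripping over the same thing.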

Finally, I review all of the feedback with my client and we work out actions based on the results. For example, we might change a label for something that was hard to find, or make it more prominent.

Getting Started

Usability testing is super interesting and valuable, but it’s also a bit scary. Honestly my advice is to just start, and don’t worry about it being perfect from day 1. Testing with even one person is better than testing with none – as my heroes at NN/g like to say, zero users give zero insights!

If you are interested in participating in a usability study for a charity, you can register your interest via our Mission Digital program here: https://missiondigital.com.au/become-a-usability-tester/

And of course, if you want someone to conduct testing on your behalf you can always reach out and hire me to do it for you!

Resources

https://www.nngroup.com/articles/usability-testing-101

https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users

https://www.goodreads.com/book/show/1713426.Predictably_Irrational